Transboundary Research

Project from "Cybernetic Humanity"

Reciprocity in Human-Machine Hybrid Systems

Emergence and Collapse of Reciprocity in Semiautomatic Driving Coordination Experiments with Humans

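This research is a joint project of lead author Hirokazu Shirado (CMU), Nicholas A. Christakis (Yale University), and Sony CSL researcher Shunichi Kasahara. Kasahara is carrying out this project as part of the Cybernetic Humanity research.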

Abstract

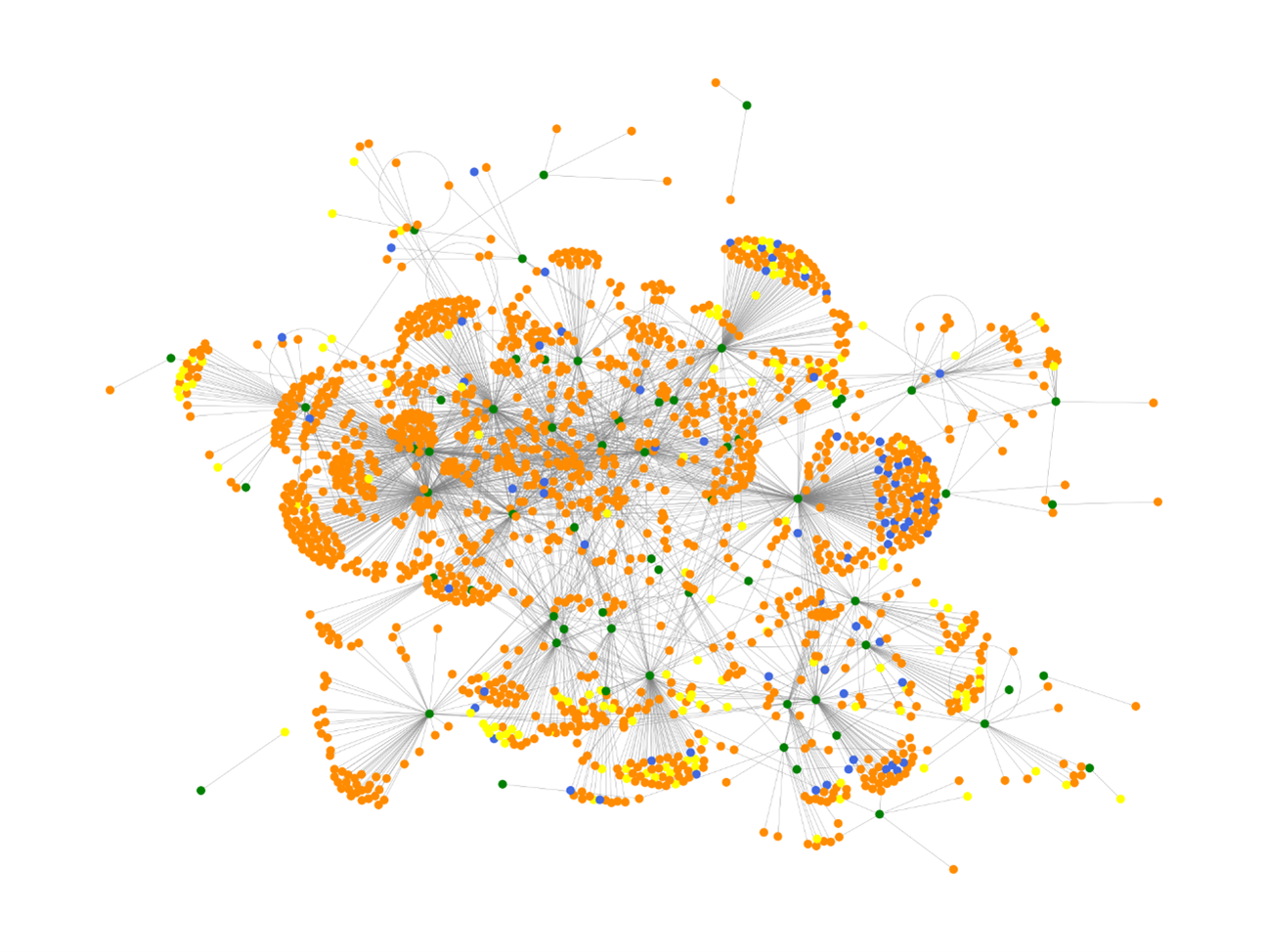

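Machine intelligence, from simple to complex forms, is having a growing influence within human groups. From the standpoint of collective action problems, such involvement may inadvertently suppress existing beneficial human social norms, such as cooperation. We tested theoretical predictions with a novel cyber-physical lab experiment in which online participants (N = 300, in 150 pairs) remotely drove robotic vehicles in a coordination game. We found that auto-braking assistance increased altruistic human behavior, such as giving way to others, and that communication promoted mutual concession. In contrast, auto-steering assistance prioritized maximizing self-interest and completely inhibited the formation of mutual benefit between people. Interestingly, this social effect was still observed after the assistance system was no longer in use. Moreover, introducing a communication function did not mitigate the loss of reciprocity, because people rarely communicated in the auto-steering condition. Our findings suggest that active safety assistance (a simple form of AI support for driving) can alter the dynamics of social coordination between people, including by affecting the trade-off between individual safety and social reciprocity. The difference between auto-braking and auto-steering appears to relate to whether AI assistive technology supports human agency or replaces it entirely in a social coordination dilemma. Humans have developed norms of reciprocity to address collective challenges, but these tacit understandings may break down when machine intelligence, including AI, takes part in human decision-making without holding such norms.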

Paper

Shirado, Hirokazu, Shunichi Kasahara, and Nicholas A. Christakis. 2023. “Emergence and Collapse of Reciprocity in Semiautomatic Driving Coordination Experiments with Humans.” Proceedings of the National Academy of Sciences of the United States of America (PNAS) 120 (51): e2307804120.

https://www.pnas.org/doi/10.1073/pnas.2307804120

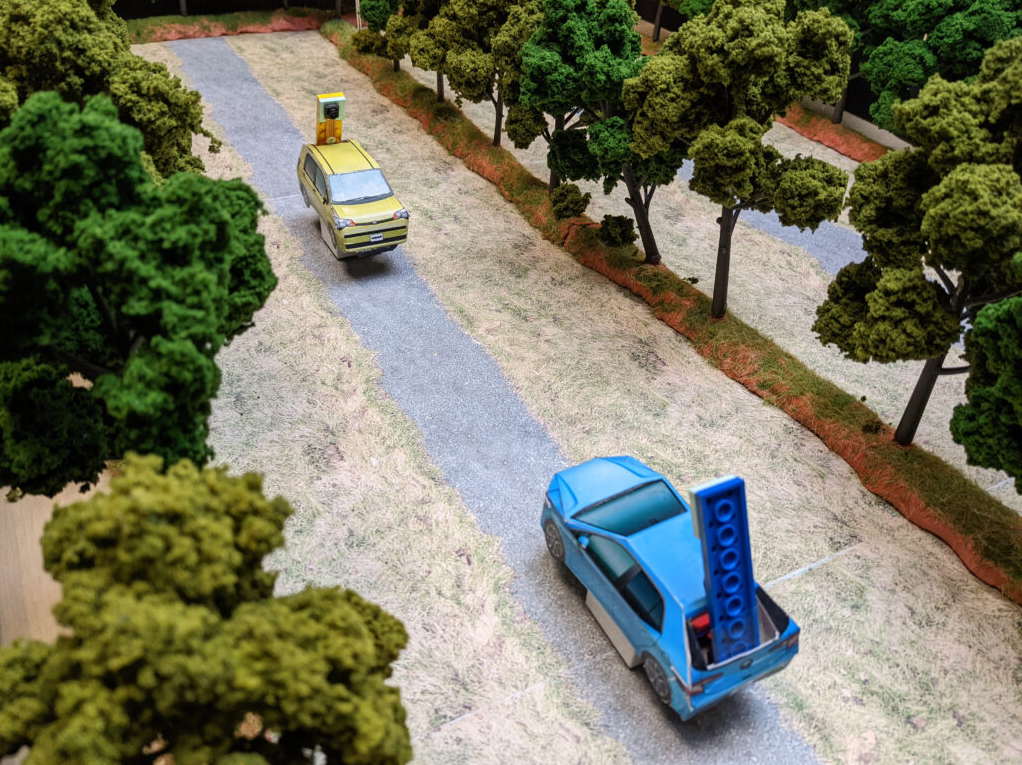

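This project concerns social norms in hybrid systems that combine humans and machines. To investigate how computer assistance affects social norms, we built a cyber-physical lab experiment system with real-time video streaming, in which online participants (N = 300, in 150 pairs) remotely drive physical robotic vehicles in a coordination game.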

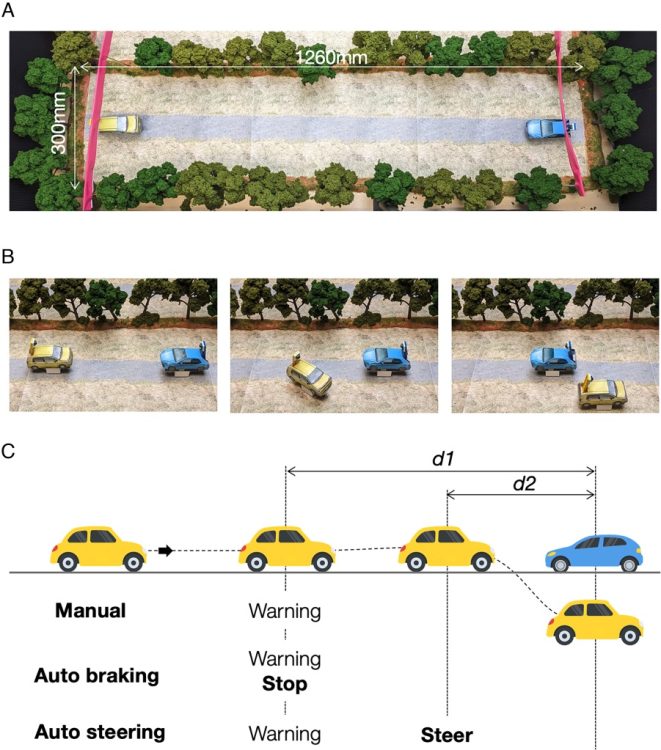

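Experiment setup. (A) The physical coordination space. Two robotic cars face each other on a single road. Players control the robots remotely over the internet, setting their speed and whether they drive on or off the road. (B) A sequence in which only one side, the yellow car, turns. To avoid a collision, at least one player must give way, but doing so reduces driving speed by 75% (and reduces the reward as well). (C) Experimental treatments of the driving system. In addition to the default manual driving, a car with auto-braking stops temporarily and automatically with a warning, and a car with auto-steering automatically steers away at the last moment.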
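The coordination dilemma in the caption can be illustrated with a minimal sketch. It is not the authors' implementation: the names `play_round` and `RoundOutcome` are hypothetical, speeds are expressed relative to full on-road speed as an assumption, and only two facts are taken from the caption (a collision happens unless at least one player gives way, and giving way reduces speed by 75%). The reward function and the consequences of a collision are not specified here, so the sketch leaves them out.

```python
from dataclasses import dataclass

# Assumption: speeds are relative to full on-road speed (1.0).
ON_ROAD_SPEED = 1.0
# From the caption: giving way reduces driving speed by 75%.
YIELD_SPEED = ON_ROAD_SPEED * (1 - 0.75)


@dataclass(frozen=True)
class RoundOutcome:
    """Result of one head-on encounter (hypothetical structure)."""
    collision: bool
    speed_a: float
    speed_b: float


def play_round(a_yields: bool, b_yields: bool) -> RoundOutcome:
    """Resolve one round given whether each player leaves the road."""
    # A collision occurs only when neither player gives way.
    collision = not (a_yields or b_yields)
    # Nominal speeds only; what happens after a collision is not modeled.
    speed_a = YIELD_SPEED if a_yields else ON_ROAD_SPEED
    speed_b = YIELD_SPEED if b_yields else ON_ROAD_SPEED
    return RoundOutcome(collision, speed_a, speed_b)


if __name__ == "__main__":
    # Enumerate the four possible combinations of choices.
    for a_yields in (False, True):
        for b_yields in (False, True):
            print(a_yields, b_yields, play_round(a_yields, b_yields))
```

In this sketch, the outcome in which exactly one player gives way avoids the collision but leaves that player slower than the other, which is why taking turns across rounds is one way for a pair to share the cost.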

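Auto-braking assistance was shown to increase altruistic human behavior, such as giving way and making mutual concessions. Auto-steering assistance, however, completely suppressed reciprocity between people. The video below shows an example of a non-reciprocal pattern.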

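A bird's-eye view, round by round, of both vehicles turning. This sample session was conducted in the auto-steering condition with communication. The animation above shows the recorded information used in the analysis: vehicle movements, participants' communication messages and their timing (in this example, the participants sent no messages), and the timing of assistance system activation (such as warnings and autonomous emergency steering assistance). The robotic vehicles returned to their starting positions automatically between rounds.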

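The difference between the reciprocal and non-reciprocal patterns appears to depend on whether the technology supports or replaces "human agency" in a social coordination dilemma. This suggests that agency in hybrid systems can affect social coordination. The video below shows a reciprocal pattern.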

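A bird's-eye view, round by round, of only one vehicle turning. This sample session was conducted in the manual condition with communication. The animation above shows the recorded information used in the analysis: vehicle movements, participants' communication messages and their timing, and the timing of assistance system activation (note: the participants in this example did not trigger the warning system). The robotic vehicles returned to their starting positions automatically between rounds.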
